def generic_ml_model(x, y, treatment, model, n_split=10, n_group=5, cluster_id=None):

nobs = x.shape[0]

blp = np.zeros((n_split, 2))

blp_se = blp.copy()

gate = np.zeros((n_split, n_group))

gate_se = gate.copy()

baseline = np.zeros((nobs, n_split))

cate = baseline.copy()

lamb = np.zeros((n_split, 2))

for i in range(n_split):

main = np.random.rand(nobs) > 0.5

rows1 = ~main & (treatment == 1)

rows0 = ~main & (treatment == 0)

mod1 = base.clone(model).fit(x.loc[rows1, :], (y.loc[rows1]))

mod0 = base.clone(model).fit(x.loc[rows0, :], (y.loc[rows0]))

B = mod0.predict(x)

S = mod1.predict(x) - B

baseline[:, i] = B

cate[:, i] = S

ES = S.mean()

## BLP

# assume P(treat|x) = P(treat) = mean(treat)

p = treatment.mean()

reg_df = pd.DataFrame(dict(

y=y, B=B, treatment=treatment, S=S, main=main, excess_S=S-ES

))

reg = smf.ols("y ~ B + I(treatment-p) + I((treatment-p)*(S-ES))", data=reg_df.loc[main, :])

reg_fit = reg.fit()

blp[i, :] = reg_fit.params.iloc[2:4]

blp_se[i, :] = get_treatment_se(reg_fit, cluster_id, main)[2:]

lamb[i, 0] = reg_fit.params.iloc[-1]**2 * S.var()

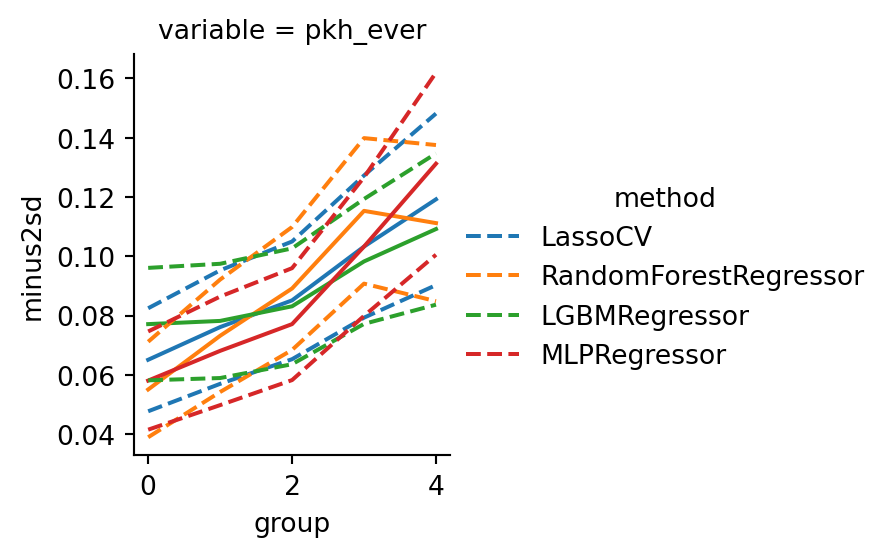

## GATEs

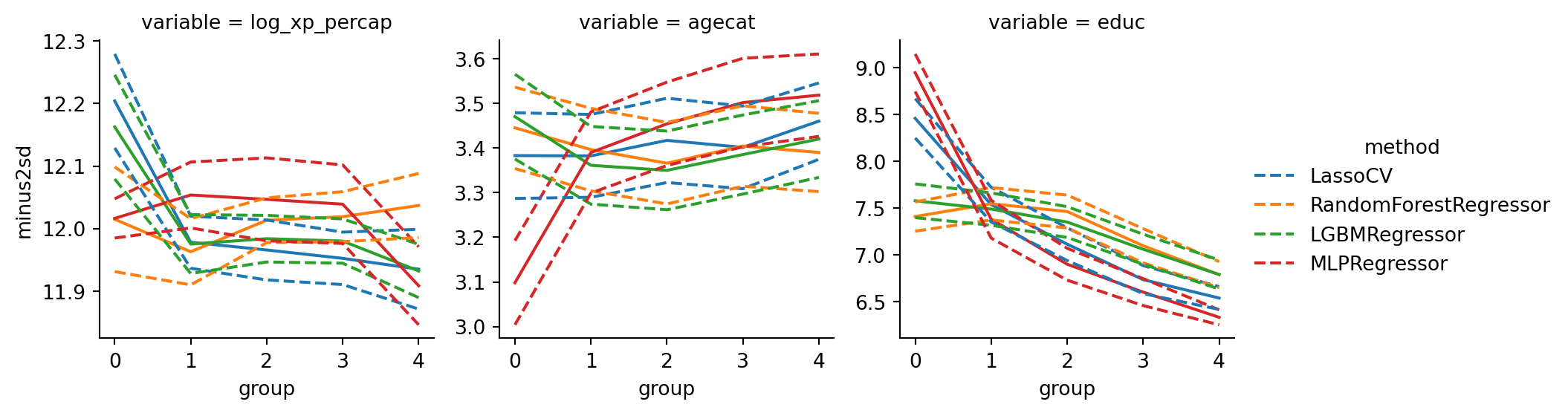

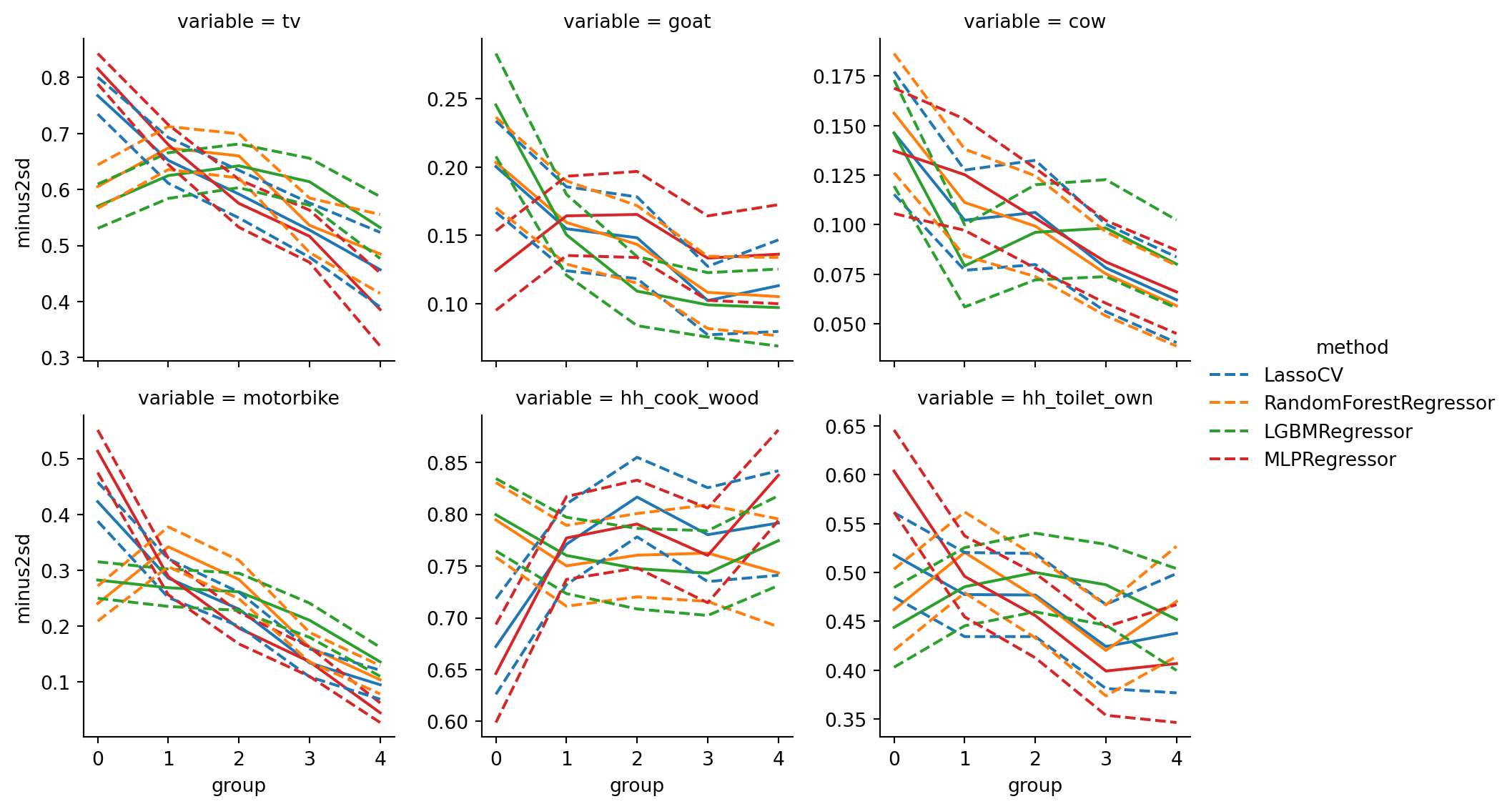

cutoffs = np.quantile(S, np.linspace(0,1, n_group + 1))

cutoffs[-1] += 1

for k in range(n_group):

reg_df[f"G{k}"] = (cutoffs[k] <= S) & (S < cutoffs[k+1])

g_form = "y ~ B + " + " + ".join([f"I((treatment-p)*G{k})" for k in range(n_group)])

g_reg = smf.ols(g_form, data=reg_df.loc[main, :])

g_fit = g_reg.fit()

gate[i, :] = g_fit.params.values[2:] #g_fit.params.filter(regex="G").values

gate_se[i, :] = get_treatment_se(g_fit, cluster_id, main)[2:]

lamb[i, 1] = (gate[i,:]**2).sum()/n_group

out = dict(

gate=gate, gate_se=gate_se,

blp=blp, blp_se=blp_se,

Lambda=lamb, baseline=baseline, cate=cate,

name=type(model).__name__

)

return out
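
To see what the BLP and GATE regressions recover, here is a minimal, self-contained sketch on synthetic data. It assumes a randomized treatment with constant propensity 0.5. To isolate the regression step, it plugs in the *true* baseline and CATE in place of the ML proxies `B` and `S`. `blp_gate_demo` is a hypothetical helper, and it uses plain NumPy least squares rather than `statsmodels` formulas. The regressors match the ones in `generic_ml_model`.

```python
import numpy as np


def blp_gate_demo(n=20_000, n_group=5, seed=0):
    """Hypothetical helper: BLP and GATE regressions on synthetic data."""
    rng = np.random.default_rng(seed)
    z = rng.uniform(-1, 1, n)              # single covariate
    d = rng.binomial(1, 0.5, n)            # randomized treatment, P(D=1) = 0.5
    tau = 1.0 + 2.0 * z                    # true CATE, average about 1.0
    y = z + d * tau + rng.normal(0.0, 1.0, n)

    # Stand-ins for the ML proxies: the true baseline and the true CATE
    B, S = z, tau
    p = d.mean()
    w = d - p                              # (treatment - p)

    # BLP: y ~ 1 + B + (D - p) + (D - p) * (S - mean(S))
    X = np.column_stack([np.ones(n), B, w, w * (S - S.mean())])
    beta = np.linalg.lstsq(X, y, rcond=None)[0]

    # GATE: quantile groups of S, one (D - p) * 1{G_k} regressor per group
    cutoffs = np.quantile(S, np.linspace(0, 1, n_group + 1))
    cutoffs[-1] += 1                       # include the maximum in the last group
    groups = [(cutoffs[k] <= S) & (S < cutoffs[k + 1]) for k in range(n_group)]
    Xg = np.column_stack([np.ones(n), B] + [w * g for g in groups])
    gamma = np.linalg.lstsq(Xg, y, rcond=None)[0]

    # Drop the intercept and the B coefficient, as generic_ml_model does with [2:]
    return beta[2:], gamma[2:]


beta, gamma = blp_gate_demo()
print("BLP (beta_1, beta_2):", beta.round(2))
print("GATEs:", gamma.round(2))
```

With a perfect proxy, `beta` should land near `[1, 1]` (the average effect, and a heterogeneity loading of one). `gamma` should land near the quintile-group means of the true CATE, roughly `[-0.6, 0.2, 1.0, 1.8, 2.6]`, up to sampling noise. If the proxy `S` is the true CATE plus independent noise, `beta[1]` is attenuated toward zero, and `Lambda = beta_2^2 * Var(S)` falls with it.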

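```python
def generic_ml_summary(generic_ml_output):
    """Aggregate generic_ml_model output across splits: column-wise median, ignoring NaNs."""
    out = {
        x: np.nanmedian(generic_ml_output[x], axis=0)
        for x in ["blp", "blp_se", "gate", "gate_se", "Lambda"]
    }
    out["name"] = generic_ml_output["name"]
    return out
```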
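
`generic_ml_summary` takes the median of each `(n_split, k)` array column by column. A related way to turn per-split standard errors into an interval is to form `est ± z * se` within each split and then take the median of the lower and upper bounds. The sketch below does that. `summarize_splits` is a hypothetical helper, and the arrays are made-up toy values, not results. The sketch applies no adjustment to the nominal 95% level for the fact that the bounds are median-aggregated across random splits, so treat the interval as approximate.

```python
import numpy as np


def summarize_splits(estimates, ses, z=1.96):
    """Hypothetical helper: median-aggregate per-split estimates and bounds.

    `estimates` and `ses` have shape (n_split, k), like the "gate" and
    "gate_se" arrays returned by generic_ml_model. Each split gets a normal
    interval est +/- z * se; the column-wise medians of the estimates, lower
    bounds, and upper bounds are returned. NaN entries (e.g. a failed split)
    are ignored by np.nanmedian.
    """
    estimates = np.asarray(estimates, dtype=float)
    ses = np.asarray(ses, dtype=float)
    est = np.nanmedian(estimates, axis=0)
    lower = np.nanmedian(estimates - z * ses, axis=0)
    upper = np.nanmedian(estimates + z * ses, axis=0)
    return est, lower, upper


# Toy input: 3 splits, 2 groups; the second group is missing in split 2
gate = np.array([[0.1, 0.5], [0.3, np.nan], [0.2, 0.7]])
gate_se = np.tile([0.05, 0.1], (3, 1))
est, lower, upper = summarize_splits(gate, gate_se)
print(est, lower, upper)  # medians: est = (0.2, 0.6), lower = (0.102, 0.404), upper = (0.298, 0.796)
```

Because the split is random, any single split's estimate is itself random. Taking medians keeps the reported numbers from hinging on one lucky or unlucky split.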